🧍‍♂️ Biography

I received my M.S. degree in 2025 from the School of Computer Science and Engineering at Beihang University (BUAA), where I was supervised by Prof. Yunhong Wang and Prof. Jiaxin Chen. Before that, I received my B.E. degree in 2022 from Xiamen University (XMU). I am currently a researcher at SenseTime Research. My research interests include:

- Model Quantization and Pruning for Vision Transformers, multi-modal models, and large language models.

- Inference Acceleration for video generation models.

📖 Education

- 2022.09 – 2025.01, M.S. Beihang University, School of Computer Science and Engineering, IRIP Laboratory.

- 2018.09 – 2022.06, B.E. Xiamen University, School of Information.

💼 Work Experience

- 2025.02 – Now, Researcher, SenseTime Research.

- 2024.06 – 2024.10, Intern, ByteDance.

- 2023.12 – 2024.04, Intern, Beijing Academy of Artificial Intelligence (BAAI).

📝 Publications

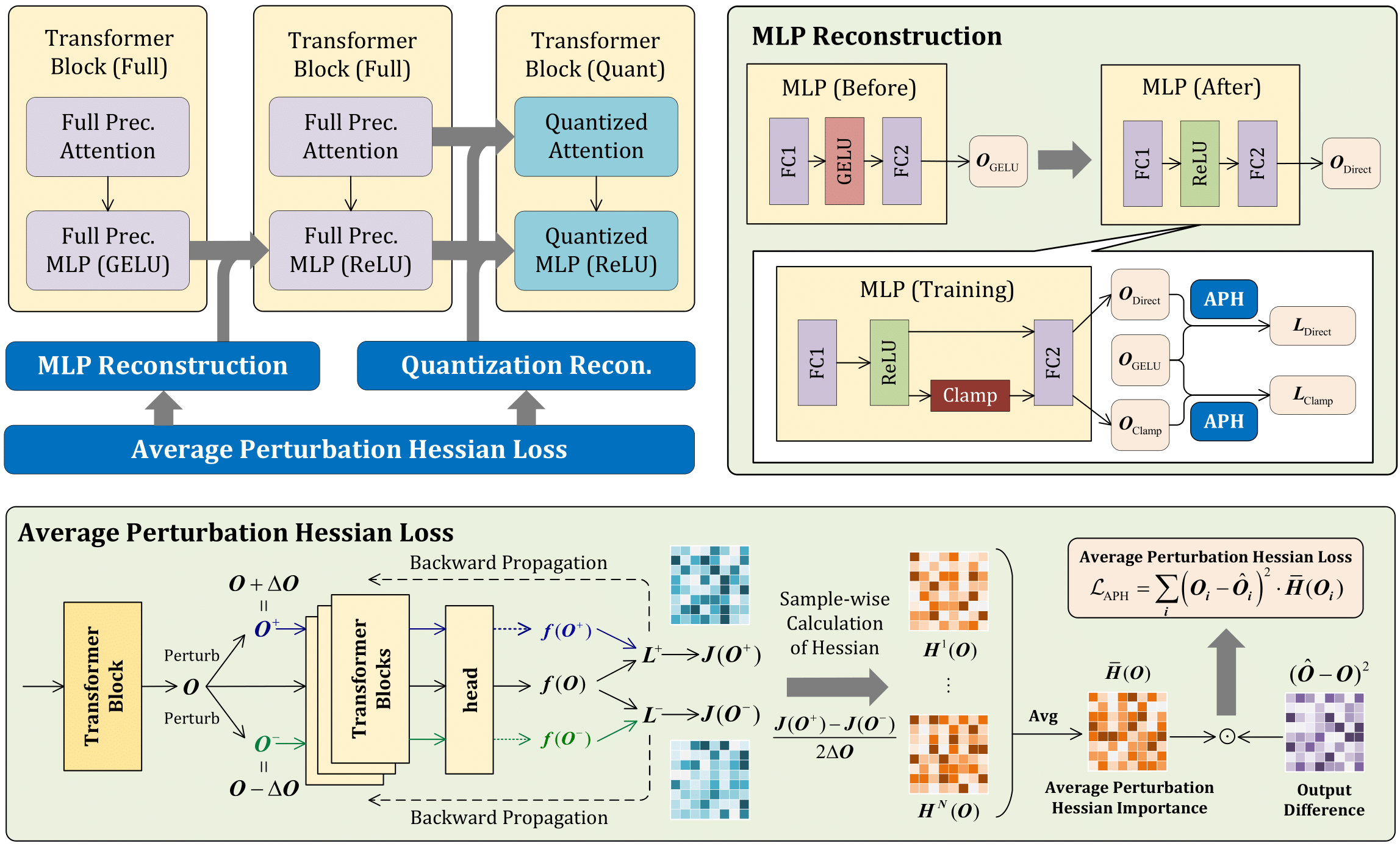

[CVPR 2025] APHQ-ViT: Post-Training Quantization with Average Perturbation Hessian Based Reconstruction for Vision Transformers [GitHub] [arXiv]

Zhuguanyu Wu, Jiayi Zhang, Jiaxin Chen✉, Jinyang Guo, Di Huang, Yunhong Wang✉

In this paper, we propose an importance estimation criterion based on the average perturbation Hessian (APH) for block reconstruction. Compared with previous methods, APH relies on fewer assumptions and approximations and makes the optimization process more stable. We further propose MLP-Reconstruction (MR), which significantly reduces the activation range during block reconstruction and replaces the GELU activation function with ReLU to further improve accuracy.

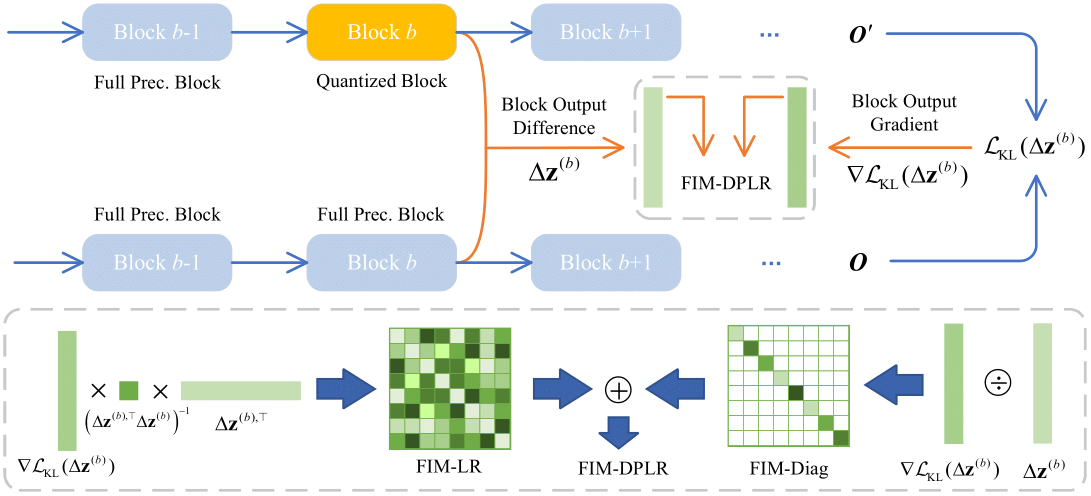

[CVPR 2025 Highlight] FIMA-Q: Post-Training Quantization for Vision Transformers by Fisher Information Matrix Approximation [GitHub]

Zhuguanyu Wu*, Shihe Wang*, Jiayi Zhang, Jiaxin Chen✉, Yunhong Wang✉

In this paper, we conduct a systematic analysis of the widely adopted Hessian-based quantization loss and identify its inherent limitations and inaccuracies. In light of these limitations, we propose a novel quantization loss formulation based on the Fisher Information Matrix (FIM). First, we theoretically establish the relationship between the FIM and the KL divergence. Leveraging this relationship, we develop four approximation schemes for the FIM, among which the diagonal plus low-rank (DPLR) approximation achieves superior performance across various ViT architectures and tasks.

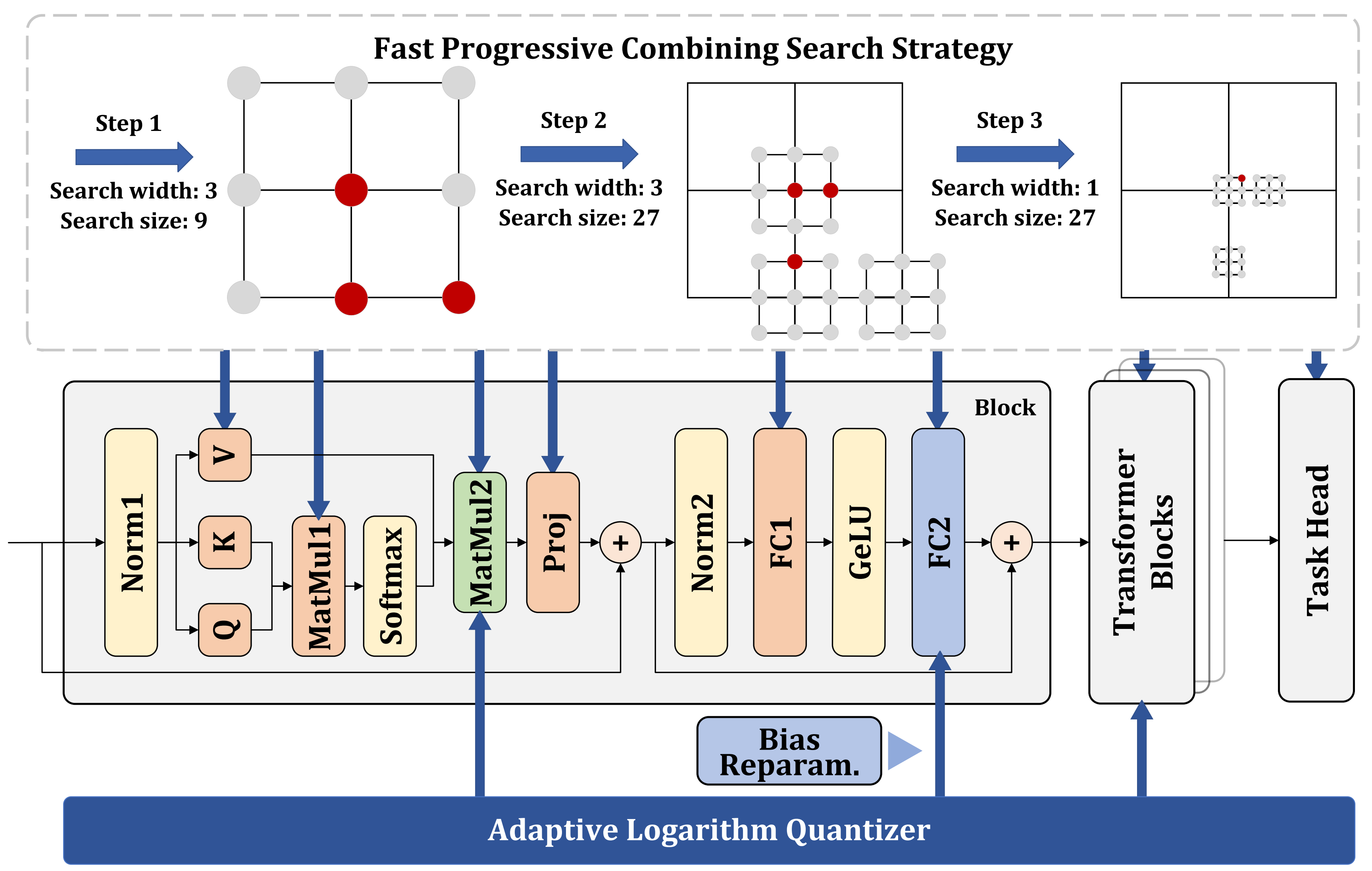

[ECCV 2024] AdaLog: Post-Training Quantization for Vision Transformers with Adaptive Logarithm Quantizer [GitHub] [arXiv] [bibtex]

Zhuguanyu Wu, Jiaxin Chen✉, Hanwen Zhong, Di Huang, Yunhong Wang

In this paper, we introduce a hardware-friendly base-adaptive logarithmic quantizer (AdaLog), which adaptively adjusts the logarithmic base to align with the power-law distribution of activations while allowing hardware-friendly quantization and de-quantization through lookup-table and bit-shift operations. By incorporating bias reparameterization techniques, we successfully apply the AdaLog quantizer to post-GELU activation quantization. Additionally, we propose a Fast Progressive Combining Search (FPCS) strategy to accurately determine quantization parameters.

🎖 Honors and Awards

- 2025.01 Beihang University, The Outstanding Graduate Honor

- 2025.01 Beihang University, The Outstanding Master’s Thesis Award

- 2022.06 Xiamen University, The Outstanding Graduate Honor

- 2021.05 The 2019 ICPC Asia Yinchuan Regional Contest, Silver Medal

- 2021.04 The 2020 ICPC Asia-East Continent Final, Silver Medal

- 2020.12 The 2020 ICPC Asia Shanghai Regional Contest, Silver Medal

- 2020.10 The 2020 CCPC Weihai Contest, Bronze Medal

- 2019.10 The 2019 ICPC Asia NingXia Regional Contest, Bronze Medal